Can prompting ChatGPT make us better communicators?

There are benefits to learning how to prompt AI tools like ChatGPT well. But some things can’t be replicated. What do we lose?

Originally published in print and online by The Straits Times on 10th September 2025

Most of us didn’t grow up thinking we’d spend a few hours every day talking to a robot.

But here we are, in 2025, and millions of us now write daily messages, not just to colleagues or friends, but to large language models (LLMs) with names like ChatGPT, Gemini and Claude. We use them to write tricky e-mails, draft CVs, clarify economic jargon, and sometimes, just to feel a little less alone.

In the early days, prompts were often vague or overly broad, such as “Tell me a fun fact”. And the tools matched that simplicity: early chatbots like Eliza responded with generic, shallow replies that barely scratched the surface of the creative potential users were hoping for. But as the technology evolved into more powerful models like GPT-4 and other advanced LLMs, prompts became more nuanced, contextual and goal-oriented.

Today, prompting is no longer just a tech skill, but a form of communication literacy.

It’s not about getting better answers, but about asking better questions. It’s about figuring out what you really want to say, how to say it clearly, and anticipating how your words will land – on both human and machine ears. And in that sense, AI might not just be shaping our communication for us; it might be teaching us to be better communicators ourselves.

As a linguist, I can see how language has always evolved alongside our tools, from the printing press to the typewriter to SMS lingo. LLMs are simply the latest and most responsive addition.

But unlike the tools that came before, these talk back. And the way we talk to them will influence more than just our writing. It will shape our thought patterns, our social instincts and even our capacity for empathy.

Making language work better

Every (decent) prompt is, in essence, a moment of reflection. Before you hit the Enter button, you have to ask yourself: What am I trying to say? What kind of tone do I want? Who’s my audience? It’s almost like a micro-writing workshop before the actual task.

In linguistics, we look at this as the ability to think about how language works in social contexts. When we prompt AI, we’re constantly softening directness, adjusting tone, encoding politeness and predicting how the system might interpret our words.

In effect, we’re teaching the machine while rehearsing how to communicate with more clarity and intention ourselves.

Prompting AI has even spawned a strange new dialect – part human, part machine, part creative overcompensation. You’ve probably seen (or typed) some variation of this: “Imagine you are a (insert role here). Please write a persuasive 300-word post with a confident tone, suitable for a mid-career audience in Asia-Pacific…”

Or “Please write a simple, 5-day itinerary for (destination) that balances sightseeing, food and downtime”.

We’re becoming fluent in a new form of prompt-speak: pre-empting the AI’s needs before it can guess wrongly, articulating our requests as if briefing an overly literal intern.

Done well, a prompt shares the DNA of a detailed brief, a clear instruction, even a friendly conversation starter. It forces us to think about audience, structure and purpose.

In a world where miscommunication is often the root of conflict – at work, in relationships, online – that’s not a bad thing to train. And we’re training ourselves every time we prompt.

The importance of a good prompt

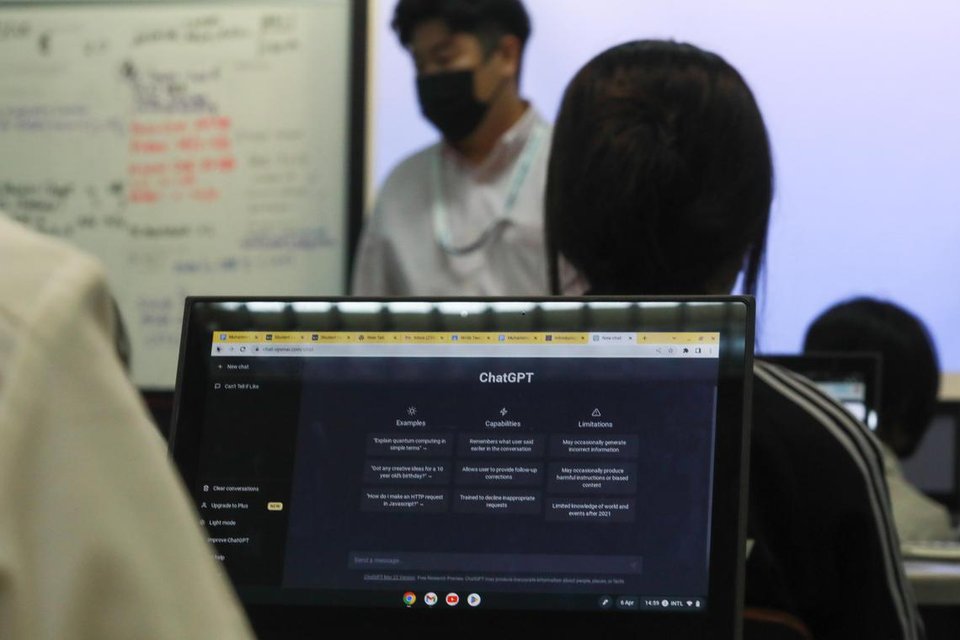

In classrooms, the ability to ask the right question is quietly becoming one of the most valuable skills a student or teacher can have.

A recent study from the University of Pennsylvania revealed a surprising split: students who used ChatGPT performed 48 per cent better on problem sets, but scored 17 per cent worse on follow-up tests than peers who did not use AI.

It turned out that among the students who had used ChatGPT, a third of their interactions consisted of them asking some variation of “What’s the answer?”.

In contrast, those guided by AI tutors designed to prompt them – nudging with hints and open-ended questions – saw a 127 per cent boost on their assignments.

In the workplace, employees who know how to communicate clearly with AI make smarter decisions and waste far less time. For example, a six-week trial at ANZ Bank showed that engineers using GitHub Copilot completed tasks more quickly and with greater satisfaction, simply by learning how to guide the tool more effectively.

In daily life, the ability to communicate clearly is a surprisingly underrated skill. Whether you’re writing a note to ask for help at work, or reaching out to someone after a misunderstanding, the success of that interaction often hinges less on what you say and more on how you say it. Think: tone, timing, context.

These are soft skills we’re often expected to know intuitively, but many people are never taught them. Prompting AI can offer a space to practise.

When someone types, “Write a message to my boss asking for a deadline extension that’s respectful and clear, but doesn’t sound like I’m making excuses”, they’re not only outsourcing a task but learning how to express accountability and intent. Over time, these repeated acts sharpen our communication instincts.

For neurodivergent individuals and anyone who struggles with social nuance, structured prompting is especially powerful. Conditions like autism or ADHD often come with challenges around interpreting or producing the indirect, socially “smooth” language prized in many workplaces.

These tools don’t so much enable “better” communication in a standardised, neurotypical sense as communication that’s more accessible and adaptive.

Proceed with caution

But this communication upgrade happens only if people learn how to do it well.

Doing it well means learning how to have a conversation. The AI is almost like a collaborator that needs context, direction, and a little patience. The quality of what you get back depends entirely on the clarity you bring to the table.

It also helps to know your tools, as not all AI models are built the same. Some are better at logic and long-term thinking, while others shine in tone and style. ChatGPT’s o3, for instance, is great for strategy and structured reasoning; 4o is your reliable, well-rounded generalist. And 4.5 is where things get fun – one to turn to when you want something short, punchy and creative.

From there, the real work begins: breaking things down. AI thrives when it’s guided step-by-step. Instead of dropping in “write a post” and hoping for magic, start upstream.

What’s your goal? Who’s it for? What’s the angle? Choose a perspective. Give it a rough draft. One-shot prompts often miss the mark. But when you build prompts as a sequence, each one building on the last, you get far better results.

Then, lean into the details and be specific. Give it tone, voice, structure, constraints. The more context you give, the sharper and more tailored the output becomes.
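As a rough illustration of the advice above, here is a minimal Python sketch that assembles a prompt from a goal, audience, angle, tone and constraints instead of a bare “write a post”. The `PromptBrief` class and its field names are hypothetical, invented for this example; no AI tool requires this particular format.

```python
from dataclasses import dataclass, field


@dataclass
class PromptBrief:
    """Hypothetical container for the details a prompt should carry."""
    goal: str
    audience: str
    angle: str
    tone: str = "clear and friendly"
    constraints: list[str] = field(default_factory=list)

    def to_prompt(self) -> str:
        """Join the details into a single, explicit prompt."""
        lines = [
            f"Goal: {self.goal}",
            f"Audience: {self.audience}",
            f"Angle: {self.angle}",
            f"Tone: {self.tone}",
        ]
        # Constraints are optional, so list them only when present.
        for item in self.constraints:
            lines.append(f"Constraint: {item}")
        return "\n".join(lines)


brief = PromptBrief(
    goal="Write a post about learning to prompt AI tools",
    audience="mid-career professionals",
    angle="prompting as communication practice",
    constraints=["under 300 words", "no jargon"],
)
prompt = brief.to_prompt()
print(prompt)
```

The point is less the code than the habit it encodes: each field is a question you answer before you hit send, and follow-up prompts can then refine one field at a time.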

The human touch

But there are parts of human communication that AI simply can’t replicate. Real connection and trust have always been shaped by the messy, imperfect parts of communication: ambiguity, repair, vulnerability, silence.

AI can’t navigate the social or emotional terrain we constantly move through in conversation. Consider code-switching: the way we shift our tone, language, or behaviour depending on who we’re speaking to and what’s at stake – like softening your accent in a job interview, slipping into local slang with friends, or switching between formal and casual registers depending on whether you’re speaking to a client or a sibling.

AI might mimic a writing style, but it doesn’t feel the need to adapt. It doesn’t understand how identity, power, or cultural context shapes the way we speak. It can generate the right words, but not the lived experience behind why those words matter.

Silences, gaps and pauses in conversation are, in fact, part of the structure of how we take turns and coordinate with each other when we talk, according to sociologist and early master of conversation analysis Emanuel Schegloff.

Conversational silence also plays a key role in managing interpersonal relationships, with pauses signalling when it’s time to reflect, or when the conversation is about to take a new direction, according to a recent study. Neuroscience also shows that even brief pauses of two to three seconds can activate the brain’s default mode network, boosting memory retention and emotional processing.

These tiny human flourishes are especially decisive in high-stakes settings – like crisis negotiations and first dates. A two-second pause after someone shares something personal can be read as care; a self-deprecating joke can defuse tension; a moment of shared silence can communicate solidarity without saying a word.

These subtle cues depend on lived experience, mutual history, and context, all the things that give our words meaning.

Ultimately, we become better communicators not just by polishing our output, but by staying present in the messy, human parts of language. If we expect conversations to flow as smoothly as an AI-generated response, we risk losing the very things that make those conversations meaningful.
